1. The Analysis Should Match Observations

- Misconception

- Observed Data vs Model Resolution and Physics: Observed Data

- Observed Data vs Model Resolution and Physics: Accounting for Discrepancies

- Assimilation Cycling

- Accountability in Action

- Test Your Knowledge

- Reality

1. The Analysis Should Match Observations » Misconception

In trying to produce the best possible NWP forecast, you might think that the model initial analysis should exactly match the observations used in that analysis, but you would be wrong.

Let's explore what's behind this counter-intuitive misconception.

The initial analysis comes from a complicated combination of the observations and a short range model forecast called the trial field (also referred to as the first guess field), and is designed to provide the best possible starting point for the forecast model. The analysis must account for such factors as differing accuracy of the various observing systems, the possibility of incorrect observations, and the relative importance of the trial field and the observations. The analysis must also be consistent with the model's own resolution and its own physics. For these reasons, the model initial analysis will differ somewhat from the observations.
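
One simple way to picture this weighting is to combine the trial field value and the observations at a single grid point with weights inversely proportional to their assumed error variances. The sketch below is only an illustration of that idea, not the scheme used by any particular operational analysis; the function name `blend` and all of the numbers are hypothetical.

```python
def blend(trial_value, trial_var, observations):
    """Inverse-variance weighted combination of a trial-field value and observations.

    observations: list of (value, error_variance) pairs; may be empty.
    Returns the blended (analysis) value at one grid point.
    """
    weight_sum = 1.0 / trial_var
    weighted_total = trial_value / trial_var
    for value, var in observations:
        weight_sum += 1.0 / var
        weighted_total += value / var
    return weighted_total / weight_sum


# Hypothetical numbers: a trial-field temperature of 2.0 C (error variance 1.0)
# and two observations of differing quality.
analysis = blend(2.0, 1.0, [(4.0, 1.0), (0.0, 4.0)])

# Data void: with no observations the trial-field value is returned unchanged.
no_data = blend(2.0, 1.0, [])
```

In this toy case the analysis value lies between the trial field and the observations and matches none of them exactly, while the less accurate observation (the one with the larger error variance) has the least influence.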

1. The Analysis Should Match Observations » Observed Data vs Model Resolution and Physics: Observed Data

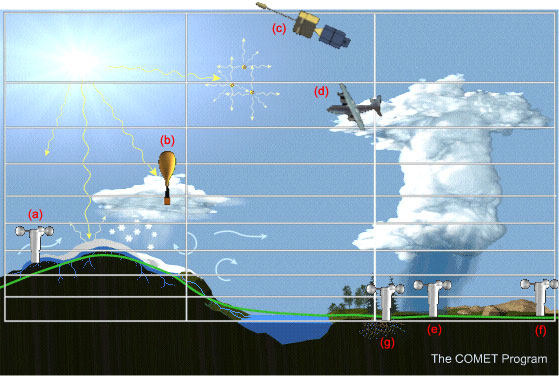

To illustrate why this is necessary, let's take a look at an example of several different observation types within a typical model grid. In the illustration we have a two-dimensional cross section of the three-dimensional model grid: the grid is composed of a multitude of rectangular boxes. Within the sample area enclosed by the grid, we find a number of different observing systems:

(a) A surface observing system (for example, from the synoptic network) where there is a sub-grid scale gradient in snow cover and topography, as well as in surface temperature.

(b) A radiosonde ascending through a sub-grid scale snow shower.

(c) A satellite measuring a particular radiance from an atmospheric layer which is thicker than the height of the individual grid boxes.

(d) An airplane measuring the sub-grid scale wind at the level of a cumulonimbus anvil.

Three additional surface observations in the same grid box:

- (e) one in the convective rain shaft;

- (f) one in the pre-convective area ahead of the cloud;

- (g) one in the post-convective area behind the cloud.

In general, the resolution of observations in the real atmosphere is different from the model's resolution, which means that features resolved by the model are "different" in some sense from the features resolved by the observing systems.

1. The Analysis Should Match Observations » Observed Data vs Model Resolution and Physics: Accounting for Discrepancies

In some cases there are features that the model can't resolve, but that the observations can. In other cases, the observing system samples a volume larger than the grid box resolved by the model. Furthermore, we mustn't forget that the trial field is always used in the analysis. The analysis considers the trial field to be, essentially, another type of data, with values available on the full three-dimensional grid.

Let's discuss some of these ideas in more detail:

- (1) Sample resolution in the atmosphere versus model resolution:

- The satellite measures average radiances over a deep layer in the atmosphere, while the model actually has better vertical resolution than the satellite is capable of sensing.

- (2) Consistency with model physics:

- The analysis will add moisture to reflect the observed humidity at an observation such as the one in the rain shower. However, if the model atmosphere tends to be too dry, then the model will simply dump out a lot of moisture during the first few time steps to dry itself out to the level required by its own physics.

- (3) Grid boxes containing no observed data:

- Numerous grid boxes (over oceans, polar regions, and very high in the atmosphere, for example) will have no observed data at all that can be used for the analysis. Such data voids are filled by the analysis with trial field values.

- (4) Conflicting or erroneous observations:

- Erroneous observations do occur, and may or may not be rejected by the analysis, depending on rejection criteria. Flagrantly incorrect observations are easy to spot and reject, but smaller errors are harder to spot. Even if the observed data are correct, a single grid box can easily contain conflicting data. For example, the three observations in and around the rain shower all lie in the same grid box, but are conflicting as far as the analysis is concerned because they are measuring sub-grid scale phenomena.

- (5) Observation error:

- Every observing system has some inherent observation error. Some systems have greater errors, and some less, so the analysis gives greater or lesser weight to observations from different observing systems. An observation judged to have sufficiently large error will in fact be ignored, and have no influence at all on the analysis.

- (6) Vertical structure:

- Meteorological systems have vertical structure, which must be correctly represented in the analysis. Suppose we have surface observations which show the presence of a low pressure center, without corresponding upper level data. The surface observations by themselves in the analysis may lead to a poor forecast, since in that case the model will tend to weaken the low if its initial conditions do not define the supporting upper-level structure.

- (7) Mass-wind relationships:

- Mass-wind relationships are of great importance in the analysis, because they determine the ageostrophic flow, which in turn can be related to significant vertical motions. The observed mass field, represented by geopotential heights, defines the geostrophic wind which, when subtracted from the actual observed wind at the same location, gives us the ageostrophic wind at that location. Therefore, the mass-wind relationship is best defined from independent but co-located observations. Radiosondes provide such observations in any atmospheric column. Satellites, on the other hand, provide mass observations only where skies are clear, and wind observations only at cloud-top levels where cloud motion can be tracked. These observations are not co-located.
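
The subtraction described in the mass-wind item can be sketched in a few lines. This assumes the standard geostrophic relations on a constant-pressure surface in midlatitudes, u_g = -(g/f) ∂Z/∂y and v_g = (g/f) ∂Z/∂x; the function names, the height gradient, and the observed wind are hypothetical values chosen only for illustration.

```python
import math

G = 9.81            # gravitational acceleration, m/s^2
OMEGA = 7.292e-5    # Earth's rotation rate, rad/s


def geostrophic_wind(dz_dx, dz_dy, lat_deg):
    """Geostrophic wind (u_g, v_g) in m/s from geopotential height gradients (m per m)."""
    f = 2.0 * OMEGA * math.sin(math.radians(lat_deg))  # Coriolis parameter
    return (-G / f * dz_dy, G / f * dz_dx)


def ageostrophic_wind(observed, geostrophic):
    """Observed wind minus geostrophic wind, component by component."""
    return (observed[0] - geostrophic[0], observed[1] - geostrophic[1])


# Hypothetical co-located sounding at 45 N: heights fall 60 m per 500 km toward the north,
# and the observed wind is (15, 2) m/s.
ug, vg = geostrophic_wind(dz_dx=0.0, dz_dy=-60.0 / 500e3, lat_deg=45.0)
ua, va = ageostrophic_wind((15.0, 2.0), (ug, vg))
```

Because the result is a difference of two quantities, it is only meaningful when the height gradient and the wind describe the same location and level, which is why co-located observations matter.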

In summary, various data types with varying characteristics and geographic coverage are combined with the trial field to produce the objective analysis. It is to the forecaster's advantage to know how the analysis has been affected by the presence (or absence) of the various types of meteorological data, and by the quality of the trial field: a good forecast is unlikely if there are weaknesses in the initial analysis.

1. The Analysis Should Match Observations » Assimilation Cycling

A high quality meteorological analysis cannot be obtained without the use of assimilation cycling. In this process, the analysis combines a short-term model forecast (the trial field, or first-guess field) with all available observations: in effect, the observations are used to make small corrections to the short-term forecast.

Let's illustrate the process of assimilation cycling with a graph. The vertical axis shows the state of a particular atmospheric variable (temperature, wind, etc.) at a given grid point, while the horizontal axis shows the time. The graph therefore illustrates the variable's evolution with time.

The red line indicates the "true" or "best" state of the variable in the analysis, given the model's resolution and physics. The pink region represents the "zone of truth," which corresponds to the allowable range of values given the observation density and error.

Before each analysis time, the model makes a short-range forecast valid at the time of the analysis. This is the trial field. As the trial field forecast values of the model variables change with time, they may move out of the "zone of truth." The job of the analysis is to use the observed data to bring each variable back into the acceptable range, resulting in an initial analysis which will serve as the basis for the next model forecast.

One major advantage of assimilation cycling is that good information from previous analyses is retained, and so made available for future forecasts. This is particularly important in data-sparse areas. For example, an area in the middle of an ocean may have a couple of ship reports at 12Z which define a low-pressure area. This information is then carried through to 18Z via the trial field. If there are no observations at all in that area at 18Z, the analysis will still "know" about the low at that time because of the assimilation cycling procedure. This means that the analysis is dependent to some degree on the quality of the NWP model used to create the trial field. If the short-range forecast is bad, then the corrections made by the analysis may not be sufficient to bring model variables back into the "zone of truth." This can lead in turn to more bad forecasts.

In summary, the assimilation cycling process is designed to create a four-dimensional representation of the state of the atmosphere in which analyzed values of atmospheric variables are consistent with the physics, dynamics, and numerics of the NWP model used in that process. The goal is to extract as much usable information as possible from all the available observations, while avoiding inconsistent information which might corrupt the analysis.
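
The cycle described in this section can be mimicked with a toy example for a single variable at a single grid point. This is a minimal sketch under simplifying assumptions: the "model" is a one-line function, the correction is a fixed fraction (`gain`) of the difference between observation and trial field, and the function name and all numbers are hypothetical.

```python
def cycle(initial_analysis, forecast_step, observations, gain=0.5):
    """Run a toy assimilation cycle for one variable at one grid point.

    forecast_step: function mapping an analysis to the next trial-field value.
    observations: one entry per analysis time; None marks a time with no data.
    gain: fraction of the (observation - trial) difference applied, between 0 and 1.
    Returns the list of analyses, one per analysis time.
    """
    analyses = []
    state = initial_analysis
    for obs in observations:
        trial = forecast_step(state)              # short-range forecast valid at analysis time
        if obs is None:
            state = trial                         # data void: keep the trial-field value
        else:
            state = trial + gain * (obs - trial)  # small correction toward the observation
        analyses.append(state)
    return analyses


# Hypothetical example: central pressure (hPa) of an oceanic low. The toy model
# deepens the low by 1 hPa per cycle; ship reports exist only at the first time.
analyses = cycle(1000.0, lambda p: p - 1.0, [990.0, None, None])
```

The later analyses still carry the correction made at the first analysis time even though no further observations arrive, and they also inherit whatever error the toy model adds at each step.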

1. The Analysis Should Match Observations » Accountability in Action

Now let's look at an example of how observations are used to correct the trial field in the analysis process. In black are wind barbs of the changes that the analysis made to the trial field 250 hPa winds, as a result of taking into account the available observations.

The difference between observed wind and trial field wind can also be calculated. These differences are plotted as red wind barbs at each observing site.

If the analysis were to match the observations perfectly, then the red and black wind barbs would match perfectly. In practice, this rarely happens. Instead, the analysis under-corrects. Changes to the trial field are generally toward the observed wind, both in speed and direction, but are smaller than would be required to match the observations perfectly.

For example, you can see that the wind speed is under-corrected, but the correction is toward the observed value, at stations such as Omaha, Minneapolis, Amarillo, Midland, and Little Rock (blue circles).

Now consider Peach Tree City, near Atlanta (yellow circle), where something unusual happened: the observation shows that the trial field was off by 50 knots. This difference is very large and therefore the analysis assumed the observation was incorrect and so ignored it. As a result, the correction to the trial field shows a light wind in the opposite direction to the observation.

Such a situation is uncommon. If the observation is actually correct, then the analysis will be incorrect, and a forecast bust may occur. In this particular case the NWP models missed a blizzard which occurred after the time of this analysis.

Usually, though, observations are used by the analysis with under-corrections as described earlier. This can have major implications for rapidly-evolving systems, or systems that are entering the radiosonde network for the first time, such as those coming in from the Pacific. The trial field will often underestimate the true intensity of such systems, and so it can take several assimilation cycles before the analysis catches up with their true structure.
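
The two behaviours described in this section, under-correction and rejection of observations that differ too much from the trial field, can be sketched for a single wind observation as follows. The gain of 0.6 and the 40-knot rejection threshold are hypothetical values chosen for illustration, not the settings of any real analysis system, and `analyze_wind` is a made-up name.

```python
import math


def analyze_wind(trial, observed, gain=0.6, reject_above=40.0):
    """Return (increment, used) for one wind observation.

    trial, observed: (u, v) wind components in knots.
    gain: fraction of the observation-minus-trial difference that is applied.
    reject_above: differences larger than this magnitude (knots) are treated
                  as bad observations and ignored.
    """
    du = observed[0] - trial[0]
    dv = observed[1] - trial[1]
    if math.hypot(du, dv) > reject_above:
        return (0.0, 0.0), False          # observation rejected: no correction from it
    return (gain * du, gain * dv), True   # under-correction toward the observation


# A modest difference is partly corrected; a 50-knot difference is rejected.
small, small_used = analyze_wind((80.0, 0.0), (100.0, 10.0))
large, large_used = analyze_wind((80.0, 0.0), (130.0, 0.0))
```

In this sketch a rejected observation contributes nothing. In a real analysis, neighboring observations can still change the trial field at that location, which is how a correction pointing away from the rejected observation can arise.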

1. The Analysis Should Match Observations » Test Your Knowledge

Which procedures would be useful to assess the model's initial conditions?

The correct answers are a), c), d) and e)

a) The trial field is an integral part of the analysis, and so weaknesses in the trial field will be reflected in the analysis, especially in data-sparse areas.

b) Many data rejections will indeed be due to incorrect observations. However, it is also possible for correct observations to be rejected by the analysis.

c) Patterns in the satellite imagery reflect current atmospheric conditions, and so comparing the imagery to the analysis can help determine whether or not the analysis is on the right track.

d) Assuming correct observations, large differences between the analysis and the observations will indicate areas of the atmosphere whose structure is not well-defined by the analysis.

e) High-resolution numerical models can produce spurious features on a scale smaller than that of the observing network. These features may show up in the analysis through the trial field, and their presence may contaminate the following NWP model forecast.

f) The analysis must retain internal consistency with the physics, dynamics, and numerics of the model used in the assimilation process. Sub-grid scale processes by definition cannot be resolved by the model, so the analysis will generally not carry the details of those processes. Clearly, the forecaster must be aware of such missed details when issuing a short-range forecast.

g) Large differences in observations may be due to one or more erroneous observations. If the observations are correct, then the differences are probably due to measurement of small-scale features not representative of the average of the grid box. In either case, the analysis is produced according to its own rules governing data rejections and the use of the observations and the trial field. While the analysis may have weaknesses in such cases, it may also produce the best possible representation of the atmosphere given the model's own dynamics, physics, and numerics.

1. The Analysis Should Match Observations » Reality

That the analysis should match observations is a misconception.

In summary:

- The initial analysis should not necessarily look exactly like the observations.

- In some cases there are meteorological features that observations can resolve, but that the analysis can't.

- The NWP model provides data for the analysis via the trial field in the assimilation cycling process.

- In most cases the analysis under-corrects for discrepancies between the observations and the trial field.

- Observations with large discrepancies are generally ignored. In the rare case in which such observations are actually correct, ignoring them may result in a bad forecast.

These characteristics of the assimilation and analysis processes must be considered by forecasters in their assessment of numerical model forecast guidance.

To learn more about this topic, visit: Understanding Data Assimilation: How Models Create Their Initial Conditions, a module that is part of the NWP Distance Learning Course.

2. High Resolution Fixes Everything

- Misconception

- Grid and geophysical field resolution can affect the forecast

- When higher resolution helps the QPF

- Do mesoscale models guarantee better forecasts?

- Test Your Knowledge

- Reality

2. High Resolution Fixes Everything » Misconception

2. High Resolution Fixes Everything » Grid and geophysical field resolution can affect the forecast

A snow event in southern New England on December 30, 2000, demonstrates that running a higher resolution model doesn't always lead to a better QPF.

The verification of storm total precipitation shows a region of heavy snow with amounts in the range of one to one-and-a-half inches of liquid equivalent over southern New York and northern New Jersey. There were even some small areas over southern New York where observed maximum snow amounts exceeded 1.5 inches of liquid equivalent.

The AVN model, with a horizontal resolution of 80 km, provided a forecast of amounts in the one to one-and-a-half inches liquid equivalent range, centered on western Long Island. The model had the right idea, but forecast this area a little too far south and east.

The ETA model, with a 22 km horizontal resolution, placed its area of heaviest precipitation even farther south than the AVN. Comparing the two forecasts with the verification, it is clear that the AVN QPF was better than that of the ETA for this case, despite the fact that the ETA's grid spacing was almost four times finer than the AVN's.

Why did this happen? To answer that, let's take a look at the same event using an experimental ETA run, which incorporates a high-resolution sea surface temperature analysis. We see that the QPF from the experimental run was much improved from the operational ETA QPF.

When using the coarse operational SST analysis, the ETA model did not perform as well as the AVN. The ETA forecast improved only when it used a higher-resolution SST which more closely matched the ETA's grid resolution. The extra information led to a better QPF. The AVN model's resolution better matched that of the coarse SST analysis, and forecasters would have been better off trusting its QPF in this case, despite the operational ETA model's higher resolution.

In summary, in this case higher model resolution did not "buy" a better forecast. Rather, it just increased the ETA model's sensitivity to the sea surface temperature. The higher-resolution SST had a significant positive impact on the ETA model's QPF. The AVN, on the other hand, with its lower resolution, performed reasonably well with the operational coarse SST analysis.

2. High Resolution Fixes Everything » When higher resolution helps the QPF

Now let's take a look at a scenario in which a higher-resolution model does lead to a better precipitation forecast.

Here we are looking at potential flooding in southern California on February 23, 1998, as strong on-shore flow meets a topographical barrier. The observed rainfall was very heavy in parts of Los Angeles, Ventura, and Santa Barbara counties, with amounts exceeding 8 inches, and even 12 inches in places.

Comparing the QPFs of the 29 km ETA and the 10 km ETA with the observed precipitation amounts, it is easy to see that the higher resolution QPF matches the verification chart more closely.

Why is this so? In the previous example we saw that higher resolution did not lead to a better forecast.

The answer lies in the resolution of the topography and the fact that a well-forecast synoptic scale flow is interacting with that topography. This is a best-case scenario for gaining value with a higher-resolution model. Looking closely at the model topography, shown in thin grey lines on the two forecast charts, you can see that much more detail is visible at 10 km resolution than at 29 km.

In both cases the topography is forcing vertical motion and therefore precipitation in the strong southwesterly flow, but the forcing differs according to the detail of the topography. The higher-resolution model does a much better job in locating the areas of heavier precipitation. The amounts are still underforecast—by 4 inches in some cases—but the areas of heavier rainfall closely match the observed pattern. The 10 km resolution forecast is a very useful one.

In this situation we have a strong synoptically-forced flow moving over well-defined topography. Furthermore, there are no significant secondary effects, such as mountain valley circulations or sea breeze circulations. Given these circumstances, higher model resolution can generally be expected to lead to a more accurate forecast of precipitation.

2. High Resolution Fixes Everything » Do mesoscale models guarantee better forecasts?

Modern very high-resolution NWP models with highly sophisticated microphysics are available to the research community. Would the operational use of such models automatically lead to better forecasts?

Let's take a look at an example of this by reviewing a high-resolution post-event model run for a summer severe weather case in which a tornado occurred near Oklahoma City in May 1999.

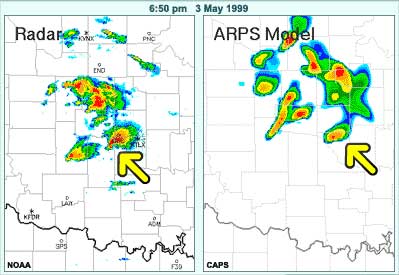

The sequence of images presents the observed radar reflectivities from 5:10 through 8:50 in the evening of May 3, along with model-simulated reflectivities at those times, based on the non-operational ARPS model. This model features 3-km horizontal resolution, accounts for ice microphysics, and includes a variety of other sophisticated components. The model was initialized with radar data after the first convective cells formed earlier in the day.

The model forecast and the observations are similar in terms of the general area in which convection is taking place. However, if we take a closer, frame-by-frame look and focus on county lines, we can see that the model is not accurate in the details of location and intensity of the convection. Such details would be necessary to produce accurate warnings.

For example, at 6:50 a tornado is occurring here near this impressive hook echo while the ARPS model is showing the cell to be weakening and already off to the northeast.

Clearly, high-resolution models such as the ARPS will not always provide better forecasts. One practical reason for this is simply timeliness: output from such models is not available soon enough to be useful in forecasting. For example, in this case the model had to be initialized with radar data from the first developing cells, and its forecast output was available much later than that.

More importantly, we must recognize basic limitations which are inherent in very high-resolution models. Observational data used by such models may not always be available at the required resolution, so the initial analysis may "fill in the holes" with incorrect details. However, a correct convective forecast requires that the model's structure of the atmosphere be "just right". This includes the atmospheric moisture, which is highly variable and notoriously difficult to analyze. Moreover, it's not enough to have perfect upper-atmospheric structure in the model: boundary layer and surface variables and processes, such as temperature, moisture, mixing, vegetation, soil types, and evaporation determine in part the instability and so the convection. These are modelled to a greater or lesser degree, but a given model may not include a full treatment of all these elements. Finally, numerical representations of precipitation processes through model physics packages are unlikely to be perfect. For example, outflow boundaries, which often initiate new convection, may be missed in the model. For these reasons, there is no guarantee that any model, even with very high resolution, will forecast convective precipitation in the right general area, and even if it does, it is unlikely to handle correctly all the details of location and intensity of the convection.

Limitations of High-Resolution Models:

- The analysis may fill in data "holes" with incorrect details.

- Correct forecasts of convection rely on accurate depiction of upper-atmospheric parameters, some of which are highly variable and so difficult to analyze.

- Important boundary-layer and surface variables may not be modeled well.

- Precipitation processes are unlikely to be represented perfectly.

2. High Resolution Fixes Everything » Test Your Knowledge

Which of the following statements summarizes a message you should take from the discussion about this misconception?

Discussion:

The correct answers are b), d) and e)

a) A large-scale flow can be well-defined even with low-resolution data. Such a flow can interact with the detailed topography of a mesoscale model to produce more accurate forecasts of wind and precipitation than would be possible with a lower-resolution model.

b) Sharp features in fields such as sea surface temperature or vegetation can affect the forecast at scales which can be handled by higher-resolution models; such sharp features are lost in lower-resolution analyses.

c) If the assimilation is handling smaller-scale features correctly and if the model physics for those scales is adequate, then it is possible that the higher resolution model will produce a better forecast.

d) Terrain has a direct effect on some variables such as precipitation and wind. More-detailed model terrain can clearly interact with the atmospheric flow in certain situations to produce a better forecast.

e) More data means that the analysis takes longer to run. If the horizontal and vertical resolution are increased, then the model time step must be shortened in consequence, and model execution time increases because of the higher resolution in space and time. Finally, higher-resolution output means bigger files, which result in slower dissemination.
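
The cost argument in answer e) can be made concrete with a rough scaling estimate. The sketch below assumes the same domain and vertical levels, a time step that shrinks in proportion to the horizontal grid spacing, and a cost proportional to the number of grid points times the number of time steps; it ignores physics, input/output, and analysis costs. The function name is hypothetical.

```python
def relative_cost(coarse_dx_km, fine_dx_km):
    """Rough factor by which run time grows when horizontal grid spacing shrinks.

    Assumes the same domain and vertical levels, a time step that shrinks in
    proportion to the grid spacing, and cost proportional to
    (grid points in x) * (grid points in y) * (number of time steps).
    """
    ratio = coarse_dx_km / fine_dx_km
    return ratio ** 3


# Grid spacings taken from the southern California example: 29 km versus 10 km.
factor = relative_cost(29.0, 10.0)
```

Under these assumptions, going from a 29 km to a 10 km grid multiplies the computation by a factor of roughly 24, before any increase in vertical resolution is counted.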

2. High Resolution Fixes Everything » Reality

Running models at higher resolution will NOT always lead to more accurate forecasts.

One of the main things to keep in mind is that all components of the model function synergistically. This means that higher resolution works best when the model also includes improved and more realistic physics packages, and more detail in the surface specifications of fields such as soil, vegetation, sea-surface temperature, and topography. High-resolution data must also be available, and the data assimilation system must handle those data correctly at the resolution of the model. In fact, the question of data and data assimilation is probably the biggest factor in obtaining improved forecasts from high-resolution NWP models.

In summary:

- The model should be able to take advantage of surface fields at a resolution comparable to its grid resolution.

- Realistic physics packages must be incorporated into the model.

- Availability of high-resolution data and correct data assimilation are of highest importance.

To learn more about this topic, visit: Model Fundamentals - version 2 and Operational Models Encyclopedia. Both are modules of the NWP Distance Learning Course.

3. A 20 km Grid Accurately Depicts 40 km Features

- Misconception

- Resolving Small-Scale Features

- Weather Features as Waves

- Larger-Scale Features in Numerical Models

- Understanding Degradation

- Test Your Knowledge

- Reality

3. A 20 km Grid Accurately Depicts 40 km Features » Misconception

With the help of high-resolution models you can improve the odds of making a perfect forecast, even for small-scale features. For example, high-resolution models will help you pinpoint the greatest concentration of lake effect snow, and they will provide an accurate forecast of the effects of downslope winds on regional temperatures. Right?

Not so fast! These ideas stem from a common misconception about NWP forecasts.

In any numerical model, features that span only 2 or 3 grid points are never well resolved. A high-resolution model with a 20 km grid will not resolve a 40 km feature with any accuracy. In fact, it will take a model resolution of less than 10 km to do an adequate job, and even then the feature will not be accurately represented for very long into the forecast.

3. A 20 km Grid Accurately Depicts 40 km Features » Resolving Small-Scale Features

Let's look at some typical small-scale features and see how they are resolved by a high resolution model.

Here is a convergence/divergence couplet associated with a cold front. The grid spacing, ΔX, is 50 km. The clouds associated with the large region of low-level convergence (feature A) will show up in the model fields. On the other hand, smaller features in the divergence region, such as feature B, span fewer than 4 grid points and will not be properly resolved.

The convergence zone features are carried in the 50 km grid, but this does not mean that they are well resolved, or that their evolution can be accurately forecast. Let's take a look at why even a model with a 20 km grid would have difficulties with these features.

3. A 20 km Grid Accurately Depicts 40 km Features » Weather Features as Waves

Atmospheric features in numerical models can be represented in wave-form. Here is a simplified 4ΔX representation by a single sine wave of an observed frontal precipitation band associated with a convergence/divergence couplet.

The blue diamonds show each grid box's average value for the feature, and the yellow line shows its resulting representation in the model.

The size of the feature in relation to the grid is 4ΔX. In this example, ΔX equals 20 km, the resolution of the model in terms of grid-point spacing. The feature is 80 km in length and is represented as a 4ΔX wave.

What we see is that the wave in the model—the yellow line—has roughly only 2/3 the amplitude of the actual wave, as well as a blocky appearance not found in the original feature. The wave is visible, but the details are not well represented.
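
The loss of amplitude from grid-box averaging can be checked with a short calculation. This sketch assumes a unit-amplitude sine wave and grid boxes whose edges sit at phase 0, so that each of the four boxes covers exactly one quarter of the wavelength; a different alignment of boxes and wave would give different numbers. The function name is hypothetical.

```python
import math


def box_averages(points_per_wave):
    """Average of a unit-amplitude sine wave over each grid box in one wavelength.

    Box edges are assumed to sit at phase 0, so for a 4-box wave each box
    covers exactly one quarter of the wavelength.
    """
    width = 2.0 * math.pi / points_per_wave
    averages = []
    for i in range(points_per_wave):
        left, right = i * width, (i + 1) * width
        # The integral of sin(x) is -cos(x); divide by the box width for the mean.
        averages.append((math.cos(left) - math.cos(right)) / width)
    return averages


four = box_averages(4)
peak_4 = max(four)                 # 2/pi, about 0.64
peak_10 = max(box_averages(10))    # largest box average for a 10-box wave
```

For the 4ΔX wave the largest box average is 2/π, close to the two-thirds figure quoted above, and each pair of neighboring boxes shares the same magnitude, which is the source of the blocky appearance. Calling the same function with 10 boxes per wavelength gives a peak much closer to the true amplitude.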

Problems arise if we use this depiction to make a forecast. Let's put the model in motion and see how its representation of the feature diverges from the actual feature over a short period of time.

During 170 minutes, the observed feature moves one full wavelength. The model's forecast, however, shows substantial phase lag. The model wave becomes broader and loses amplitude. The result is a poor forecast of this particular feature.

3. A 20 km Grid Accurately Depicts 40 km Features » Larger-Scale Features in Numerical Models

How does this compare to the way the model handles a larger-scale feature, for instance one that spans 10 grid points? Let's take a look.

This wave represents a 10ΔX feature, for example a moisture plume spanning 200 km on the same 20 km grid. The model's representation of this feature is far more refined than that of the 4ΔX case.

Putting the model in motion, we see that the 10ΔX wave retains much better definition of this feature's phase and amplitude over the same time period. Overall, the forecast of this feature is much better than that of the 4ΔX wave.

The details of phase lag, wavelength broadening, and amplitude retention differ from model to model, but there are no models that will accurately reproduce a 4ΔX or smaller feature. A 10ΔX feature, however, will be well represented, and even features spanning as few as 6 to 8 grid points will initially be fairly well represented. But, as we will see in the next example, even features of that size will deteriorate over time in the model forecast.

3. A 20 km Grid Accurately Depicts 40 km Features » Understanding Degradation

We can get a better understanding of why the model's representation degrades with time by looking at the wave equation used to represent a feature. Here we are looking at a 7ΔX feature, a mesoscale vortex spanning 140 km on a 20 km grid.

The initial representation compares favorably to the actual feature. After putting it in motion we see that the wave degrades with time. It falls behind the actual feature, loses amplitude, and creates the beginnings of a small trailing wave.

This simple forecast equation shows the relationship between the local rate of change of the variable T and its advection by the wind. From the equation, we see that the advection term contains the gradient of T, which must be accurately represented as a spatial finite difference in the model in order to be well forecast by that model.
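
The equation itself is not reproduced in this text. Assuming it is the usual one-dimensional advection equation, with $u$ denoting the wind component along $x$ (a symbol introduced here for illustration), the equation and a centered finite-difference estimate of the gradient at grid point $i$ can be written as:

```latex
\frac{\partial T}{\partial t} = -u\,\frac{\partial T}{\partial x},
\qquad
\left.\frac{\partial T}{\partial x}\right|_{i} \approx \frac{T_{i+1} - T_{i-1}}{2\,\Delta X}
```

The centered difference is only one possible discretization; it is used here because it corresponds to a slope taken between the grid points on either side of the point of interest.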

If we look at the third grid point, we see that the model gradient—the slope of the green line between the second and fourth grid points—deviates from the actual gradient (blue line) at that point. In fact, the actual gradient, which is the slope of the wave at grid point 3, is steeper.

In running the model, the discrepancy between the represented gradient and the true gradient quickly becomes larger and the forecast degrades from reality.
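The size of this initial gradient error can be estimated with a short calculation. The sketch below assumes a pure sine wave and a centered difference taken across the two neighbouring grid points, and evaluates the gradient where the wave is steepest. The function name and the default 20 km spacing are illustrative choices only.

```python
import math

def gradient_ratio(points_per_wave, dx_km=20.0):
    """Ratio of the centered-difference gradient to the true gradient at the
    steepest point of a sine wave spanning `points_per_wave` grid lengths."""
    wavelength_km = points_per_wave * dx_km
    k = 2.0 * math.pi / wavelength_km          # wavenumber, radians per km

    def t(x_km):
        # Unit-amplitude wave, steepest at x = 0
        return math.sin(k * x_km)

    true_gradient = k                          # d/dx sin(kx) evaluated at x = 0
    model_gradient = (t(dx_km) - t(-dx_km)) / (2.0 * dx_km)
    return model_gradient / true_gradient

for n in (4, 7, 10):
    print(f"{n} dX wave: model gradient is {gradient_ratio(n):.0%} of the true gradient")
```

Under these assumptions the model captures roughly 64% of the true gradient for a 4ΔX wave, 87% for the 7ΔX wave, and 94% for a 10ΔX wave, so the advection term starts out too weak for the smaller features.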

So the more grid points available—the smaller the ΔX—the more closely the model can represent atmospheric features and in turn maintain a more accurate forecast. With a larger ΔX, there are fewer grid points to represent the feature, and as a result the forecast degrades faster.

3. A 20 km Grid Accurately Depicts 40 km Features » Test Your Knowledge

Terminology for these questions:

Resolved means that the feature exists initially in the numerical model at roughly the right scale, location, and amplitude, but not necessarily with enough definition to be accurately forecast by that model. Features as small as about four grid lengths will be resolved in an NWP model. If the feature spans fewer than four grid points, it will be "aliased," which means it will be misinterpreted as having a longer wavelength than it really does.

Well forecast means that the feature is resolved with enough definition in the model's initial analysis that it will be carried forward in time with reasonable accuracy in the subsequent model integration. A feature will be well forecast if it spans at least 8-10 grid points.
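These two definitions amount to a rule of thumb based on the number of grid lengths a feature spans. A minimal sketch follows, assuming thresholds of 4 grid lengths for "resolved" and 8 for "well forecast" (the lower end of the 8-10 range). The function name and the threshold parameters are hypothetical, and the rule considers size alone.

```python
def classify_feature(feature_km, dx_km, resolved_min=4.0, well_forecast_min=8.0):
    """Classify a feature by the number of grid lengths it spans.

    The thresholds are rule-of-thumb assumptions, not fixed properties of any model.
    """
    grid_lengths = feature_km / dx_km
    if grid_lengths >= well_forecast_min:
        return "well forecast"
    if grid_lengths >= resolved_min:
        return "resolved"
    return "not resolved"

# Feature sizes discussed earlier for a 20 km grid
for size_km in (40, 80, 140, 200):
    print(f"{size_km} km feature on a 20 km grid: {classify_feature(size_km, 20)}")
```

On a 20 km grid this labels a 40 km feature as not resolved, 80 km and 140 km features as resolved, and a 200 km feature as well forecast.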

Question 1

Suppose you are working with a numerical model that has a 12 km grid.

Question

Would a 500 mb short wave be resolved? Would it be well forecast?

Discussion:

A short wave is a dynamic feature that exists and moves within the atmosphere at various levels. A 500 mb short wave can be thought of in terms of the wave equation that was studied earlier in this session. Typical 500 mb short waves exist on a horizontal scale of hundreds of km, so in a 12 km model they will be more than simply resolved; they will be well forecast.

Question 2

Suppose you are working with a numerical model that has a 12 km grid.

Question

Would a lake breeze on the Lake Superior coast be resolved? Would it be well forecast?

Discussion:

Features such as lake and sea breezes and valley winds have their origins in terrain or in land-sea differences, but also have a dynamic component in the atmosphere once they are set up. A lake breeze on the Lake Superior coast could be quite long along the coast, but its scale perpendicular to the coast might be on the order of tens of km inland, and tens of km offshore. Willett and Sanders (Willett, H. C., and F. Sanders, 1959: Descriptive Meteorology. Academic Press. 355 pp.) state that "by late afternoon, the sea breeze reaches its broadest extent, sometimes extending as much as 30 miles inland and 30 miles out to sea." This horizontal scale of 60 miles, or approximately 100 km, appears to be a maximum for a sea breeze.

Assuming a lake breeze with a width of 50 km (spanning about four grid points) at the initial time of the 12 km model, such a breeze would be resolved (assuming good model definition of the land-sea boundary). This breeze is too small to be well forecast in the model, though. Breezes from smaller lakes, or the initial stirrings of the Lake Superior lake breeze, would have much smaller widths than 50 km. A lake breeze with a width of 20 km at initial analysis time would span less than two grid lengths of the model, and so, while the breeze would likely exist within the model, it would be aliased and incorrectly represented initially. Such a breeze would be neither resolved nor well forecast by the model.

Anecdote

"Some of you might remember the LFM model which was used in the 80s and early 90s. Its horizontal resolution was coarse by today’s standards, with a grid spacing greater than 100 km. This model would at times produce lake breezes for both Lakes Superior and Michigan. These model breezes would then converge over Eau Claire, Wisconsin, and the resulting vertical motion could in turn lead to model forecast precipitation there. This was totally unrealistic; the model did include lake breezes, but was wrong in the details of their sizes and locations. Current higher-resolution models will handle lake breezes better than the LFM, but still cannot be counted on to provide correct details of their sizes and locations."

Question 3

Suppose you are working with a numerical model that has a 12 km grid.

Question

Would orographic precipitation related to a strong southwesterly synoptic flow from the Pacific onto the BC coast be well forecast? At what scale?

Discussion:

Orographic precipitation is a feature directly linked to the topography, through its interaction with the prevailing synoptic scale flow. Except for this interaction, there are no atmospheric dynamics that cause the precipitation area to move or intensify. The precipitation will always coincide with the topography. As a result, if the synoptic flow is handled correctly, then the orographic precipitation will be well forecast down to the limit of the model's grid spacing and its topography (12 km resolution). In such cases, the feature need not span 8-10 grid points to be well forecast.

3. A 20 km Grid Accurately Depicts 40 km Features » Reality

To summarize, even high-resolution models will not resolve all small-scale features. A model must have a resolution that is appropriate for the scale of the features that are to be included in the numerical representation.

The most important factor in how well any atmospheric feature is resolved is its size compared to the model's grid spacing. Features that span fewer than 8 to 10 grid points, although fairly well represented initially, will quickly degrade in any model. Small-scale features, such as sea breezes, lake effect snow, and downslope winds, have no hope of being correctly handled by an NWP model unless they span 8 to 10 grid points. In a high-resolution model with, say, a ΔX of 10 km, features must be larger than 80 km to be well resolved and maintained during the model integration!

In Summary

- A key factor in determining whether a feature can be resolved is the feature size compared to grid spacing.

- Atmospheric features must be at least 8 times larger than the grid spacing (ΔX) in order to be well resolved and sustained for a reasonable forecast length.

To learn more about this topic, visit: Horizontal Resolution, a section of the NWP Distance Learning Course module: Impact of Model Structure and Dynamics.

4. Surface Conditions Are Accurately Depicted

- Misconception

- Surface Fields in Eta

- Surface Fields in GEM

- Comparison of Surface Fields in Eta and GEM

- Example: Greenness Fraction in Eta

- Vegetation's Impact

- Vegetation Effects in a Single Column Model

- Test Your Knowledge

- Reality

4. Surface Conditions Are Accurately Depicted » Misconception

With today's highly sophisticated NWP systems, one would assume that surface conditions are always correctly represented. For example, the model always knows exactly where snow lies on the ground, and it knows the current state of vegetation. It uses analyzed and accurate values of soil moisture, and defines flooded areas of land when they occur.

Not necessarily. Although modern NWP models do have sophisticated treatment of surface conditions, there are situations in which their forecasts will need significant adjustments due to inaccuracies in the specification of initial surface conditions used in the model, or to weaknesses in how the model handles those surface conditions.

4. Surface Conditions Are Accurately Depicted » Surface Fields in Eta

Let's begin by reviewing how two operational NWP models, the American Eta and the Canadian GEM regional, handle vegetation and soil moisture as of spring, 2002.

We'll start with the Eta model.

For vegetation type, a one-degree by one-degree global vegetation type climatology is used. The values for each Eta grid box are taken from the nearest one degree by one degree midpoint. The resolution of this vegetation-type dataset is much coarser than the resolution of the Eta model itself. This might lead to model errors in vegetation type. The vegetation fraction (also known as the greenness fraction) is the portion of each model grid box covered by live vegetation. In the Eta, vegetation fraction data are based on a 1985 to 1989 remote sensing dataset of NDVI (Normalized Difference Vegetation Index) with a resolution of 0.144 degrees. The actual vegetation fraction may be ahead of or behind this climatology.
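To see why the coarse dataset matters, consider how a nearest-midpoint lookup behaves. The sketch below assumes the one-degree cells are aligned on whole degrees, so that their midpoints fall at x.5 degrees. The function name and the sample coordinates are hypothetical and are not taken from the Eta code.

```python
import math

def nearest_midpoint(lat, lon):
    """Return the midpoint of the one-degree cell containing (lat, lon),
    assuming cells are aligned on whole degrees of latitude and longitude."""
    return (math.floor(lat) + 0.5, math.floor(lon) + 0.5)

# Three hypothetical model grid boxes, each a few tens of km from the next
grid_boxes = [(39.10, -97.30), (39.25, -97.30), (39.40, -97.30)]
for lat, lon in grid_boxes:
    print((lat, lon), "->", nearest_midpoint(lat, lon))
```

All three boxes map to the same midpoint, (39.5, -97.5), so they would all receive the same vegetation type regardless of what actually grows in each one.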

To specify soil moisture, the Eta land surface is coupled to a four-layer soil model. In the Eta's assimilation cycle, starting 12 hours before its initial time, precipitation analyses using radar and rain gauge data over the continental U.S. and over southern Canada near the Canada-U.S. border are used to "nudge" the Eta's forecast precipitation toward the observed values. The resulting precipitation analysis, which is similar to the observed amounts, then feeds the land surface model. The result is that the soil moisture is more or less anchored to the observed precipitation in the area enclosed by the blue line in the chart you see below. Nowhere is an actual soil moisture measurement used. Over the vast majority of Canada as well as Alaska, precipitation used for soil moisture is provided directly by the Eta model without any "nudging" from observations. In these areas, therefore, the soil moisture is anchored to the Eta forecast precipitation.

4. Surface Conditions Are Accurately Depicted » Surface Fields in GEM

Now we'll look at how vegetation and soil moisture are treated in the GEM regional model, in which a land surface scheme known as ISBA (Interactions among the Soil, Biosphere and Atmosphere) handles surface processes.

The vegetation type used by ISBA over North America comes from a USGS (United States Geological Survey) climatological database on a 1-km by 1-km grid. It includes 24 vegetation types. Vegetation characteristics such as leaf area index, vegetation fraction and root depth change from day to day in the model according to a pre-established table. The vegetation variables are spatially averaged to provide the GEM model with values representative of its grid areas. Since a climatology is used, the actual vegetation conditions may not match those seen by the model.

ISBA uses a two-level soil moisture model. Once per day, at 00Z, in a technique known as sequential assimilation, errors in GEM's forecasts of air temperature and relative humidity at the two-meter level (the level of the Stevenson screen) are used through an error-feedback procedure to modify the soil moisture fields on the model grid. No actual soil humidity measurements are used in this process.
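The idea of an error-feedback correction can be sketched as follows. This is only an illustration of the principle: the linear form, the gain values, the saturation limit, and the function name are all assumptions, and none of them is taken from the GEM or ISBA implementation.

```python
def update_soil_moisture(w, t_fcst, t_obs, rh_fcst, rh_obs,
                         gain_t=0.005, gain_rh=0.001, w_max=0.45):
    """Nudge volumetric soil moisture w using 2-m forecast errors.

    A forecast that is too warm or too dry suggests the model soil is too dry,
    so moisture is added; the opposite errors remove moisture.
    gain_t (per deg C), gain_rh (per % RH) and w_max are hypothetical values.
    """
    increment = gain_t * (t_fcst - t_obs) + gain_rh * (rh_obs - rh_fcst)
    return min(max(w + increment, 0.0), w_max)

# Forecast 2 deg C too warm and 10% RH too dry: the soil is moistened
print(update_soil_moisture(0.20, t_fcst=25.0, t_obs=23.0, rh_fcst=40.0, rh_obs=50.0))
```

Note that the correction is driven entirely by screen-level temperature and humidity errors. As stated above, no soil moisture measurement enters the calculation.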

Both the GEM regional model and the Eta model calculate evaporation and evapotranspiration from the surface as a function of their soil moisture and vegetation characteristics.

4. Surface Conditions Are Accurately Depicted » Comparison of Surface Fields in Eta and GEM

Eta

vegetation

For vegetation, a one-degree by one-degree global vegetation type climatology is used. The values for each Eta grid box are taken from the nearest one-by-one degree midpoint. The resolution of this vegetation-type dataset is much coarser than the resolution of the Eta model itself. This might lead to model errors in vegetation type. The vegetation fraction (also known as the greenness fraction) is the portion of each model grid box covered by live vegetation. In the Eta, vegetation fraction data are based on a 1985 to 1989 remote sensing data set of NDVI (Normalized Difference Vegetation Index) with a resolution of 0.144 degrees. The actual vegetation fraction may be ahead of or behind this climatology.

soil moisture

To specify soil moisture, the Eta land surface is coupled to a four layer soil model. In the Eta's assimilation cycle, starting 12 hours before its initial time, precipitation analyses using radar and rain gauge data over the continental U.S. and over southern Canada near the Canada-U.S. border are used to "nudge" the Eta's forecast precipitation toward the observed values. The resulting precipitation analysis, which is similar to the observed amounts, then feeds the land surface model. The result is that the long-term soil moisture is more or less anchored to the observed precipitation over the continental U.S. and extreme southern Canada. Nowhere is an actual soil moisture measurement used. Over the vast majority of Canada as well as Alaska, the precipitation that feeds the land surface model is provided directly by the Eta model without any "nudging" from observations. In these areas, the long-term soil moisture is anchored to the Eta forecast precipitation.

snow

Snow cover and snow depth for the Eta come from a daily 23-km resolution NESDIS snow cover analysis merged with the daily 47-km resolution AFWA snow depth analysis valid at 18Z. Both are based on satellite observations, and synoptic snow depth data are also used in the AFWA analysis. The AFWA analysis is quality-controlled against the NESDIS snow cover observations for approximately the 18-22Z period, to create a 1/2-degree by 1/2-degree daily snow depth analysis valid at 18Z. This analysis is first used in the 06Z Eta model run, and then in subsequent 12Z, 18Z, and 00Z runs. The snow analysis is therefore 30 hours old by the time it is used in the 00Z Eta run. Snow depth is a dynamic variable in the Eta and can change during the model integration.

ice

The ice coverage analysis in the Eta is based solely on satellite data. It comes from the SAB (Satellite Analysis Branch) and is updated once per day, valid at 00Z, on a 25.4-km resolution polar stereographic grid true at 60 degrees North. This analysis includes data for the Great Lakes. No information on ice thickness is included.

SST

Sea surface temperatures are analyzed on a one-half-degree by one-half-degree grid, using the most recent 24 hours of buoy and ship data as well as satellite-derived temperatures. The 2D-VAR technique used by this analysis has a correlation length such that detail in the SSTs tends to be preserved. This analysis is updated once per day, in time for the 00Z run of the Eta model.

GEM

vegetation

In the GEM regional model, a land surface scheme known as ISBA (Interactions among the Soil, Biosphere and Atmosphere) handles surface processes. The vegetation type used by ISBA over North America comes from a USGS (United States Geological Survey) climatological database on a 1-km by 1-km grid. It includes 24 vegetation types. Vegetation characteristics such as leaf area index, vegetation fraction, and root depth change from day to day in the model according to a pre-established table. The vegetation variables are spatially averaged to provide the GEM model with values representative of its grid areas. Since a climatology is used, the actual vegetation conditions may not match those seen by the model.

soil moisture

ISBA uses a two-level soil moisture model. In a technique known as sequential assimilation, errors in GEM's forecasts of air temperature and relative humidity at the two-metre level (the level of the Stevenson screen) are used through an error-feedback procedure to modify the soil moisture fields on the model grid once per day, at 00Z. This is done over all of North America. No actual soil humidity measurements are used in this process.

snow

The Canadian Meteorological Centre snow depth analysis is driven by precipitation and temperature forecasts from the GEM global model, and incorporates all available snow depth observations. This global analysis is updated every 6 hours, on a 1/3-degree by 1/3-degree latitude-longitude grid. The analysis is interpolated to the model grid to provide its initial snow conditions, which are never more than 6 hours old for any model run. Snow depth is a dynamic variable in GEM regional, and can change during the model integration.

ice

The Canadian Meteorological Centre ice coverage analysis uses SSMI satellite data along with daily ice observations from the Canadian Ice Service. This global analysis is updated once per day on a 1/3-degree by 1/3-degree Gaussian grid, and incorporates all ice data received during the 24-hour period ending at 00Z. Ice cover observations for 118 selected Canadian lakes from the Canadian Ice Service are also used, but are available only once per week. No ice thickness information is included in this analysis.

SST

The sea surface temperature at the Canadian Meteorological Centre is analyzed on a global latitude-longitude grid with a resolution of 37 km. The analysis is updated once per day, at 00Z, and incorporates data over the previous 24 hours from satellites, ships, and buoys. In the absence of observations, the SST analysis slowly reverts to climatology. This poses no particular problem over the oceans, but may lead to large errors over Canadian lakes in the absence of lake temperature observations. Except over the Great Lakes, such observations are scarce.

4. Surface Conditions Are Accurately Depicted » Example: Greenness Fraction in Eta

How can surface fields impact model forecasts? As an example, let's consider how the Eta model accounts for the vegetation or greenness fraction.

Take a look at this series of images depicting the vegetation fraction across the US, southern Canada, and parts of Mexico and the Caribbean. Specifically, let's zero in on some details in two highly cultivated regions: the Kansas-Oklahoma winter wheat belt and the midwestern corn belt.

In January, much of the area inland and north of 35 degrees is barren except for the winter wheat belt where the winter wheat crop was planted in the late fall.

By April, the wheat belt reaches its peak greenness while most other areas are just beginning to green up. The corn belt is an exception: it remains relatively brown compared to the neighboring areas. These other areas are covered with deciduous trees.

Moving into the summer months, the winter wheat is harvested, leading to a brown down in that area while the forested regions of the central and eastern U.S. reach peak greenness. The corn belt then remains relatively brown until July when there is a sudden explosion of vegetation as the corn grows and ripens.

In the fall the corn is harvested and browns down by October. Meanwhile the forest begins to brown down as well while the winter wheat is planted for next year's harvest and the cycle begins again.

The impact of these seasonal changes in the vegetation greenness fraction can be significant. Next we'll take a look at how these changes impact the models.

4. Surface Conditions Are Accurately Depicted » Vegetation's Impact

Why do we care about the amount of green vegetation in a numerical weather prediction model? Because it has a significant impact on low level fluxes of sensible and latent heat, which in turn determine low-level atmospheric temperature and humidity.

Green vegetation impacts humidity levels by controlling the amount of evaporation that takes place. It extracts water from sub-surface soil layers, and that water in turn can be transpired during the day into the atmosphere.

Vegetation also impacts surface energy levels. With vegetation present, energy that would otherwise go directly into heating the surface instead goes into evapotranspiration with profound impacts on surface temperature and humidity, and on the planetary boundary layer's temperature, humidity, and static stability.

4. Surface Conditions Are Accurately Depicted » Vegetation Effects in a Single Column Model

To illustrate vegetation's impact on surface temperature, we have taken what is known as a single column model to represent a single grid box and have run two cases for a full diurnal cycle. Single column models are often used to validate the physical parameterizations in NWP models.

In this example, the forcing for each case is identical and comes from observed data from an atmospheric cloud and radiation test bed in the southern Great Plains in June, 1997. The physical parameterization considered is the one used in both the AVN and MRF models. The only difference between the two cases is the amount of vegetation in the grid box. In what we will call a pre-harvest case, the grid box is 90% covered by cultivated vegetation while in the post-harvest case, only 20% of the grid box has green vegetation. The resulting diurnal cycles for surface and near-surface temperatures are shown.

Above is the diurnal cycle for the pre-harvest case. Predicted skin temperature is in gray, 2-meter diagnosed temperature in green, and 995-mb predicted temperature in yellow. Below, the same variables are shown for the post-harvest case.

There is a clear difference in the diurnal cycles between the two cases. The temperatures after harvest are 5 to 6 degrees Celsius higher than pre-harvest temperatures. Differences in moisture and characteristics of the planetary boundary layer, while not shown here, are similarly significant.

Since convection is generally parameterized in NWP models using planetary boundary layer or surface parcel stability parameters, it follows that errors in the model vegetation fraction could result in errors in convective initiation.

It is important for the regional forecaster to know if the actual state of vegetation matches the model's current greenness fraction. Depending on how closely they match, the model output may or may not need significant adjustments for use in weather forecasts.

4. Surface Conditions Are Accurately Depicted » Test Your Knowledge

Question 1

Question

Fields and forests have greened up much earlier than usual in your forecast area due to very warm and moist spring conditions. How might that affect your prediction of surface parameters for an upcoming warm sector convective situation?

Maximum surface temperature:

The correct answer is d)

In this case, the model has significantly less vegetation than is actually present. As a result, model maximum temperatures will be too warm by several degrees C, as a large portion of the incoming solar radiation in the model will be used to heat the surface rather than for evapotranspiration through the vegetation canopy.

Question 2

Question

Fields and forests have greened up much earlier than usual in your forecast area due to very warm and moist spring conditions. How might that affect your prediction of surface parameters for an upcoming warm sector convective situation?

Boundary layer depth:

The correct answer is b)

Because of the cooler land surface resulting from increased evaporation, the PBL depth will be less than forecast by the model.

Question 3

Question

Fields and forests have greened up much earlier than usual in your forecast area due to very warm and moist spring conditions. How might that affect your prediction of surface parameters for an upcoming warm sector convective situation?

Near-surface winds and turbulence:

The correct answer is b)

The cooler land surface results in less turbulent mixing in the PBL, which in turn reduces downward momentum transfer from levels above the surface where the winds are stronger, so that the surface winds are weaker in this case.

Question 4

Question

Fields and forests have greened up much earlier than usual in your forecast area due to very warm and moist spring conditions. How might that affect your prediction of surface parameters for an upcoming warm sector convective situation?

Relative humidity:

The correct answer is b)

More evapotranspiration from plants means more humidity near the surface and within the PBL. Therefore, model RH is too low in the PBL. Because of weaker turbulence in the PBL due to the cooler surface temperatures, humidity is not mixed upward as far as in the warmer case when few plants are present. This means that the actual RH near and above the top of the PBL will be lower than forecast by the model in this situation.

Question 5

Question

Fields and forests have greened up much earlier than usual in your forecast area due to very warm and moist spring conditions. How might that affect your prediction of surface parameters for an upcoming warm sector convective situation?

In this situation should the probability of convective precipitation:

The correct answer is c)

With full vegetation, low level temperatures will be lower than forecast by the model, but low level humidities will be higher. In terms of convective available potential energy, the two effects act in opposite directions, so the net effect is not clear a priori. What the forecaster can do is to create a modified forecast sounding based on his best estimate of expected low-level temperature and dewpoint, and from this sounding judge the convective potential of the situation.

Question 6

Question

Suppose the ground is bare at 00Z over southern Saskatchewan, but heavy snow falls and is observed to cover the ground at several observing stations in the 01Z to 05Z period. Which is the earliest subsequent run for each of these models that "sees" this new snow?

The Canadian snow depth analysis is updated once every 6 hours. The 18Z analysis is completed before the 00Z model run, and is used in that run. Similarly, the 06Z analysis is used in the 12Z model run. The GEMs are never more than 6 hours behind on the snow depth they "see" at their initial times of 12Z or 00Z. The snow analysis used by the Eta is updated once per day, based on 18Z USAF snow depth data, and quality-controlled by the 18-22Z NESDIS snow cover observations. It is "seen" for the first time by the next 06Z Eta run. This means that for the 00Z Eta run, the snow analysis in use is about 30 hours old. Snow in the 01-05Z period will not be "seen" by the Eta until the following 06Z run, over 24 hours later.
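The timing argument above can be checked with a small calculation. The sketch below counts hours from 00Z on the day of the snowfall and encodes the update cycles as described: an analysis every 6 hours used 6 hours later by the 00Z and 12Z GEM runs, and a single 18Z analysis per day first used 12 hours later by the Eta. The function and parameter names are hypothetical, and treating the snow as captured by any analysis valid at or after 05Z is a simplifying assumption.

```python
def first_run_seeing_snow(snow_end, analysis_period, analysis_offset,
                          run_hours, min_lag, horizon=72):
    """Return (run_time, analysis_time), in hours after 00Z on day 0, for the
    first model run whose snow analysis is valid at or after snow_end."""
    for run in range(0, horizon + 1):
        if run % 24 not in run_hours or run < snow_end:
            continue
        # Latest analysis that is at least min_lag hours old at run time
        latest_allowed = run - min_lag
        steps = (latest_allowed - analysis_offset) // analysis_period
        analysis = analysis_offset + steps * analysis_period
        if analysis >= snow_end:
            return run, analysis
    return None

# Snow falls and is observed between 01Z and 05Z, so snow_end = 5
gem = first_run_seeing_snow(5, analysis_period=6, analysis_offset=0,
                            run_hours={0, 12}, min_lag=6)
eta = first_run_seeing_snow(5, analysis_period=24, analysis_offset=18,
                            run_hours={0, 6, 12, 18}, min_lag=12)
print("GEM:", gem)
print("Eta:", eta)
```

Under these assumptions the GEM result is (12, 6), meaning the 12Z run using the 06Z analysis. The Eta result is (30, 18), meaning the 06Z run on the following day using the 18Z analysis.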

Question 7

Question

In which of these models, GEM regional, GEM global, or Eta, is the snow depth a dynamic variable (one that changes with time) during the course of the model integration?

The correct answer is d)

This is an example of the fact that surface processes can be handled differently by different models within their integrations. As of spring, 2002, GEM regional and the Eta both have dynamic snow depths. GEM global, on the other hand, "sees" the initial snow analysis but keeps it constant through its entire integration. This will change in the future when the ISBA system is connected to GEM global.

4. Surface Conditions Are Accurately Depicted » Reality

To summarize, initial surface conditions are not necessarily accurately represented in each model run. This can be due to the use of climatological surface fields as well as factors such as timing, technique, resolution, data availability, and quality control schemes of the routines used to analyze the surface fields.

In addition to potential analysis problems, you need to remember that surface processes are approximated rather than precisely modeled. They can also be highly interdependent. Furthermore, different numerical models not only can use different initial analyses of surface fields, but usually will handle those surface fields differently within their integrations. These facts guarantee that the question of surface fields and processes in NWP models is a highly complicated one.

In general, a forecaster must know how surface field data are collected and incorporated into the NWP models in order to understand how the surface fields may be deviating from reality. This understanding helps to better evaluate the model output and adjust the forecast.

In reality, the latest surface conditions for an NWP model may:

- be based on climatology;

- not match the model grid scale;

- not make it into the current model run;

- not be accurately analyzed;

- not be well-handled within the model integration.

To learn more about the treatment and effects of the various surface fields, see the COMET Web module entitled, Influence of Model Physics on NWP Forecasts (https://www.meted.ucar.edu/nwp/model_physics/navmenu.php?tab=1&page=3-0-0&type=flash)

In addition to the surface field descriptions listed in this page, current information about these and many other model characteristics can also be found in the Operational Models Encyclopedia (https://www.meted.ucar.edu/training_module.php?id=1186) on the MetEd Website.

5. CP SCHEMES 1: Convective Precipitation is Directly Parameterized

- Misconception

- Convection: Sequence of Events in Nature

- Convection: Sequence of Events in a Model with No Convective Parameterization

- Adjustment Schemes

- Mass-Flux Schemes

- Compensating for Shortcomings of Convective Parameterization Schemes

- Test Your Knowledge

- Reality

5. CP SCHEMES 1: Convective Precipitation is Directly Parameterized » Misconception

Predicting convection and resulting precipitation is an integral part of many forecasts. So you would think that the primary purpose of an NWP model's convective parameterization scheme is to predict convective precipitation.

In reality, precipitation is a by-product of a model's convective parameterization scheme. The real purpose of such a scheme is to release instability so that the models don't predict convection on the grid scale. We wouldn't want the AVN, with its 80 km grid, to produce an 80 km wide convective updraft, for example; this would be completely unrealistic.

5. CP SCHEMES 1: Convective Precipitation is Directly Parameterized » Convection: Sequence of Events in Nature

Of course NWP models must account for convection in some manner.

To do so they use parameterizations that attempt to reproduce the natural life cycle of a convective event. What might happen in a model that uses no convective parameterization? To answer this question, let's compare a natural convective sequence with the sequence that would occur in a model with no convective parameterization.

First, let's look at the life cycle of a typical convective event in nature as it would appear within a model grid column.

We begin with an unstable sounding favorable for convection. A strong updraft, which occurs in only a small portion of the grid column, quickly transports heat and moisture to the upper troposphere as the cloud builds. Compensating, weaker subsidence outside the updraft--yet still within the grid column--also occurs. Rain falls within a small portion of the grid area, while the rest of the grid area remains precipitation-free. After the convection weakens, rain from stratiform cloud at middle and upper levels may fall. The final result is a stable post-convective atmosphere.

5. CP SCHEMES 1: Convective Precipitation is Directly Parameterized » Convection: Sequence of Events in a Model with No Convective Parameterization

Now, let's examine how a model with no convective parameterization handles the same event.

Beginning with the same unstable sounding, the model builds convection using grid-scale vertical velocities. These are very small compared to real convective updrafts--on the order of centimetres per second--so the cloud grows slowly.

One result of this slow buildup is a delay in precipitation onset compared to the natural event. In addition, as things progress, the model creates heavy precipitation across the entire grid box, and the cloud does not extend as high as it would in nature. Also, the whole grid column becomes completely saturated through the depth of the model cloud.

This leads to the release of a large amount of latent heat in the lower and middle troposphere in the mature phase of the convective cloud, which in turn can cause a low pressure centre at the surface.

In the model's post-convective phase, the surface low pressure centre remains and much of the sounding is nearly saturated. These conditions can easily lead to more model precipitation.

Clearly, a model that executes with no convective parameterization scheme has no hope of producing a good precipitation forecast. Worse yet, it can even create an over-developed frontal wave or a surface low-pressure centre that is too deep. NWP models must use convective parameterization schemes if they are to have any hope of avoiding these problems.

5. CP SCHEMES 1: Convective Precipitation is Directly Parameterized » Adjustment Schemes

In essence, convective parameterization schemes rearrange heat and moisture to counteract the models' tendencies to create grid-scale convection.

There are two major types of convective parameterizations: adjustment schemes and mass-flux schemes. Let's compare the two, starting with an example of an adjustment scheme.

The Betts-Miller-Janjic (BMJ) adjustment scheme shown here is used in the operational Eta model. In this scheme, model soundings of both temperature and humidity are forced toward reference soundings, which are represented in the skew-T diagram by the blue curves. In an unstable atmosphere such as we have here, the adjustment process causes upward movement of moisture and a reduction in precipitable water. The precipitable water removed from the column must then fall as model precipitation.
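The idea of forcing a sounding toward a reference profile can be illustrated with a toy calculation. The sketch below is not the BMJ scheme itself: the four-layer humidity profiles, the layer thickness, the time step, and the relaxation time scale are all hypothetical, and the real scheme builds its reference profiles under additional constraints that are ignored here. It only shows how nudging humidity toward a reference profile moves moisture upward and leaves a reduction in precipitable water that must fall as precipitation.

```python
G = 9.81  # gravitational acceleration, m/s^2


def precipitable_water_mm(q, dp_pa):
    """Column water in kg/m^2 (equivalent to mm) from specific humidity per layer."""
    return sum(qi * dpi for qi, dpi in zip(q, dp_pa)) / G


def relax_toward_reference(profile, reference, dt_s, tau_s):
    """Move each layer a fraction dt/tau of the way toward the reference value."""
    frac = min(dt_s / tau_s, 1.0)
    return [x + frac * (r - x) for x, r in zip(profile, reference)]


# Hypothetical profiles, lowest layer first (specific humidity in kg/kg).
q_model = [0.012, 0.008, 0.003, 0.0005]
q_reference = [0.010, 0.007, 0.0035, 0.001]  # drier below, moister aloft
dp = [20000.0] * 4  # assumed layer thickness of 200 hPa each

# Assumed 15-minute step and 1-hour adjustment time scale.
q_adjusted = relax_toward_reference(q_model, q_reference, dt_s=900.0, tau_s=3600.0)

precip_mm = precipitable_water_mm(q_model, dp) - precipitable_water_mm(q_adjusted, dp)
print(f"Toy model precipitation this step: {precip_mm:.2f} mm")
```

In this toy column the lower layers dry out, the upper layers moisten, and the net loss of column water is what the scheme would report as precipitation.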

This adjustment scheme is fairly crude and has several limitations. First, the reference profiles are fixed, and will usually miss the details of any given situation. Second, the scheme is triggered only for soundings with deep moisture. Third, when triggered, the scheme often precipitates too much, leaving too little humidity for precipitation occurring later or downstream. Fourth, the scheme does not account for capping inversions that act to inhibit convection. Finally, it does not directly account for any changes below cloud base.

5. CP SCHEMES 1: Convective Precipitation is Directly Parameterized » Mass-Flux Schemes

Most of today's models use mass-flux schemes, which form the second main class of convective parameterizations. GEM regional uses the Fritsch-Chappell mass-flux scheme, but this will change in fall 2002 with the introduction of the Kain-Fritsch mass-flux scheme. The RUC and AVN models also use the Kain-Fritsch scheme.

Mass-flux schemes are designed to stabilize an atmospheric column by reducing the amount of CAPE (Convective Available Potential Energy). The process is depicted in this schematic. Air enters the sub-grid scale updrafts and is rapidly transported to middle and upper levels of the troposphere. At the same time there is compensating subsidence in the environment outside the updrafts, and there are also convective downdrafts.

As air rises in the sub-grid scale updrafts, humidity is removed and then falls as precipitation. Some of this precipitation is evaporated in the downdrafts, which leads to cooling. Entrainment and detrainment also occur. The amount of air processed, which in turn determines the amount of precipitation, is based on the amount of stabilization needed.

The environmental subsidence tends to warm the column at mid and upper levels, while the convective downdraft tends to cool the lower levels. This leads to a more stable model atmosphere, but without the formation of grid-scale convection.

Mass-flux schemes are considered to be more realistic than adjustment schemes. Their QPFs can look disorganized because they trigger convection in scattered grid boxes. While probably more realistic, such patterns can make model evaluation more difficult. One notable limitation of the Kain-Fritsch scheme is its tendency to develop unrealistically deep saturated layers in active convective areas, so that post-convective stratiform precipitation in those areas may be overforecast.

5. CP SCHEMES 1: Convective Precipitation is Directly Parameterized » Compensating for Shortcomings of Convective Parameterization Schemes

Convective parameterization schemes in general have limitations. Different schemes can have very different effects on model soundings, with more or less realism, and those effects are advected downstream. The timing and placement of convection depend on the model's large-scale forcing, its boundary layer forcing and details of the scheme's triggering process, such as the minimum convective cloud depth in mass-flux schemes. Model winds may be indirectly affected by a convective parameterization scheme, but are not directly changed by model convection as real winds are by real convection.

Given the shortcomings of convective parameterization schemes, you cannot rely on the model's convective QPF for precipitation amounts or even convective timing and location. You can evaluate the model's large scale forcing, instability and moisture, and adjust them as necessary to define potential convective areas. Your knowledge of smaller-scale effects, such as boundary layer details, can be used to refine the forecast. Remember that convection in a given region of the model causes significant changes to its atmosphere in that region and downstream--just as real convection does in the real atmosphere--but the model changes may be very different from the actual changes in nature. Such model changes can therefore reduce the usefulness of model diagnostics such as frontogenesis and PV. However, your knowledge of the limitations and biases of the convective parameterization scheme you are working with may help you to further refine the forecast.

5. CP SCHEMES 1: Convective Precipitation is Directly Parameterized » Test Your Knowledge

Question 1

Which of the following statements about CP schemes in general are true?

The correct answers are a), c), and e)

CP schemes were designed to release instability, not to forecast convective precipitation. Condensation and precipitation are created as by-products of the schemes' acting to remove the instability.

Most schemes do not directly modify the horizontal wind field.

Real convective cells have significant updrafts and downdrafts, but CP schemes do not directly alter the vertical motion field. However, future very high-resolution non-hydrostatic models with explicit convection (and so no need for a CP scheme) will be able to directly modify both the horizontal and vertical motion fields.

Question 2

Which of the following statements about particular CP schemes are true?

The correct answers are c) and e)

The BMJ technique uses reference profiles, and one of its limitations is that it does not account for the inhibiting effect of capping inversions. The Kain-Fritsch scheme is a mass-flux scheme in which stabilization of the atmospheric column is achieved through reduction of CAPE. Among its advantages are that it accounts for capping inversions and that it includes downdrafts with associated cooling near the surface.

5. CP SCHEMES 1: Convective Precipitation is Directly Parameterized » Reality

To summarize, the primary purpose of convective parameterization schemes is not simply to predict convective precipitation. Rather, it is to release instability so that the models don't produce grid-scale convection and its associated adverse impacts.

Convective precipitation is a necessary by-product of these schemes. For this and other reasons, convective precipitation is notoriously difficult for numerical models to predict. In general, the forecaster cannot rely on the model's convective QPF for precipitation amounts or even location and timing of convection. Modifications to the model's forecast are often necessary.

Reality:

- Convective parameterization schemes are designed to release model instability.

- Convective precipitation is a necessary by-product of the convective parameterization.

- The forecaster often must make significant changes to the model's convective QPF.

To learn more about this topic, visit Convective Parameterization, a unit in the module How Models Produce Precipitation and Clouds, part of the NWP Distance Learning Course.

Also, don't miss CP Schemes 2: A Good Synoptic Forecast Implies a Good Convective Forecast, one of the other misconceptions in this series.

6. CP SCHEMES 2: A Good Synoptic Forecast Implies a Good Convective Forecast

- Misconception

- Model Grid Scale vs Reality

- Fine Tuning CP Schemes

- Overactive CP Schemes

- Underactive CP Schemes

- Different Schemes in the Same Model

- Test Your Knowledge

- Reality

6. CP SCHEMES 2: A Good Synoptic Forecast Implies a Good Convective Forecast » Misconception

Suppose an NWP model has a good synoptic forecast, with accurate large-scale forcing and pre-convective soundings. It follows that convection and all its effects will also be adequately forecast.

Not at all.

Even if the model is performing well at the synoptic scale, it will not necessarily do a good job on weather elements influenced by convection such as precipitation, temperature, humidity, wind, and pressure. There are a variety of reasons for this. Let’s explore some of them.

6. CP SCHEMES 2: A Good Synoptic Forecast Implies a Good Convective Forecast » Model Grid Scale vs Reality

One key reason is that the convective parameterization schemes of current operational NWP models work at the scale of the model grid, while convection in nature occurs at much smaller scales. In effect, the model’s convective parameterization scheme must “invent” information at these smaller scales. A related problem is that the initial analyses used by the models can miss existing atmospheric details important for convection, due to the fine scale of those details.

Consider a model with a 15-km grid spacing. Each 15 x 15 km grid box holds only one value per layer for temperature, humidity, and winds, while convection in the atmosphere occurs at much smaller scales. So the model would really need many more small grid boxes to have any chance of depicting convection at the proper scale.

For example, in some situations boundary layer rolls are a critical element in convective initiation. A 1-km grid model can resolve such features and thus can initiate the associated convection.

While there is no guarantee that all the forecast details of this convection will be correct in the 1-km model, clearly a 30-km model or even a 15-km model has no chance whatsoever of resolving the physical processes involved in creating such convection. This point is illustrated schematically in the graphic.
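The scale gap can also be expressed as simple arithmetic. The short sketch below counts how many 1-km grid boxes would be needed to cover the area of a single coarser grid box; the function name is purely illustrative.

```python
def fine_boxes_per_coarse_box(coarse_km: int, fine_km: int) -> int:
    """Number of fine grid boxes that tile one coarse grid box (per model layer)."""
    per_side = coarse_km // fine_km
    return per_side * per_side


boxes_in_15_km = fine_boxes_per_coarse_box(15, 1)
boxes_in_30_km = fine_boxes_per_coarse_box(30, 1)

print(f"One 15-km box covers the area of {boxes_in_15_km} 1-km boxes")
print(f"One 30-km box covers the area of {boxes_in_30_km} 1-km boxes")
```

Every one of those 1-km boxes could hold its own temperature, humidity, and wind values, whereas the coarse box must represent the whole area with a single value per layer.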

6. CP SCHEMES 2: A Good Synoptic Forecast Implies a Good Convective Forecast » Fine Tuning CP Schemes

Another complicating factor in modeling convection and its effects is the difficulty in adjusting convective parameterization schemes to work as well as possible within the model for all weather situations. These schemes try to model atmospheric processes and use parameters related to these processes that can be adjusted, or tuned. Typically, sensitivity experiments are conducted and the parameters are tuned to give what is considered the “best” result over a variety of cases. Compromises are inevitable, though, and the chosen tuning will not be the best for every situation.

To illustrate this point, let’s consider some results from the GEM regional model with a 16-km grid and the Fritsch-Chappell convective parameterization. We’ll look at forecast precipitation in the 12-36 hour period from two model runs initialized at 1200 UTC on 4 August 1998.

The only difference between the two runs is in the tuning of the Fritsch-Chappell scheme.

In these images, the numbers in magenta are the observed precipitation in mm at various locations. The model QPF is represented by thin dashed or solid red contours, with values of 0.2, 0.5, 1, 5, 10, and 25 mm. Amounts above 5 mm are emphasized through shading in three different colors. The small boxes are labels for some of the QPF contours.

In the first run, the scheme’s trigger function was programmed with a less selective value: the threshold vertical velocity at cloud base was relatively low. In the second run, a more selective value, with a higher threshold vertical velocity, was used.
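The effect of such a threshold can be sketched with a toy trigger function. This is not the Fritsch-Chappell trigger: the cloud-base vertical velocities and both threshold values below are invented for illustration, and a real trigger function considers more than a single velocity comparison.

```python
def triggered_boxes(w_cloud_base, threshold):
    """Indices of grid boxes whose cloud-base vertical velocity exceeds the threshold."""
    return [i for i, w in enumerate(w_cloud_base) if w > threshold]


# Hypothetical cloud-base vertical velocities (m/s) in eight grid boxes.
w_cloud_base = [0.01, 0.04, 0.02, 0.07, 0.03, 0.10, 0.005, 0.06]

LESS_SELECTIVE = 0.02  # assumed low threshold (first run)
MORE_SELECTIVE = 0.05  # assumed high threshold (second run)

first_run = triggered_boxes(w_cloud_base, LESS_SELECTIVE)
second_run = triggered_boxes(w_cloud_base, MORE_SELECTIVE)

print(f"Less selective trigger convects in {len(first_run)} boxes: {first_run}")
print(f"More selective trigger convects in {len(second_run)} boxes: {second_run}")
```

With the same forcing, the lower threshold lets the scheme convect in more grid boxes, and every box that triggers under the higher threshold also triggers under the lower one.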

The differences are striking. The first run generally has more precipitation over a wider area than the second, which is expected since the scheme was able to convect more easily in the first case. Neither result is perfect. For example, observed precipitation amounts of 7, 15, 3, and 2 mm over northeastern Alberta and northern Saskatchewan were missed by the second run. Even though the first run did over-forecast amounts in this area, it provided a better “signal” for what happened there. On the other hand, in other areas such as southeastern BC, the first run was clearly too generous with its precipitation, while the second run was somewhat better with lower forecast amounts. But note that exactly the opposite was true over southeastern Manitoba. There is no single ideal solution, but one single value of the threshold vertical velocity must be chosen for the scheme when it is implemented in an operational model.

6. CP SCHEMES 2: A Good Synoptic Forecast Implies a Good Convective Forecast » Overactive CP Schemes

Another potential problem is that in some situations the model can incorrectly forecast convection because its convective parameterization scheme is either under- or overactive.

This graphic represents what happens if the scheme is overactive, which means that it has an excessive response to some model forcing.